How can you know whether a learning experience (a course) teaches what it was intended to teach? Picking up from my previous article, how do you know whether all of the participants had equally successful experiences? How can you measure learning if test scores, a record of learning activities and objectives completed, and subjective self-reported qualitative ratings are not reliable measures?

Criterion measures, administrative records, and SCORM don’t actually measure learning, and they don’t provide reliable information upon which to improve learning experiences. What can we do about the situation?

xAPI

The Experience API (xAPI) is a data standard for reporting learning activities. It supports three critical tasks:

- Identification of places where more learning interventions might be needed;

- Measurement of the impact of blended learning experiences; and

- Documentation of places where learning experiences could be improved.

xAPI makes it possible to measure learning, and it supports cross-compatibility among learning applications. Most eLearning still uses multiple-choice tests to assess learning. Because xAPI can collect every learner response to every question, it opens the way for a proven and reliable method for dealing with item variability: item analysis.
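As a rough illustration of what a recorded response could look like, here is a minimal sketch that builds one xAPI statement for a multiple-choice answer. The helper name `answered_statement`, the learner, and the `example.com` activity identifier are assumptions made up for this example; the verb and activity-type identifiers are the ADL "answered" verb and the `cmi.interaction` activity type. Sending the statement to a learning record store is left out.

```python
import json


def answered_statement(name, email, item_id, choices, response, correct_choice):
    """Build a dict shaped like an xAPI statement for one multiple-choice item."""
    return {
        "actor": {
            "objectType": "Agent",
            "name": name,
            "mbox": f"mailto:{email}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        "object": {
            "objectType": "Activity",
            "id": item_id,  # hypothetical activity IRI
            "definition": {
                "type": "http://adlnet.gov/expapi/activities/cmi.interaction",
                "interactionType": "choice",
                "correctResponsesPattern": [correct_choice],
                "choices": [
                    {"id": c, "description": {"en-US": c}} for c in choices
                ],
            },
        },
        "result": {
            "response": response,
            # True only when the learner picked the keyed answer
            "success": response == correct_choice,
        },
    }


# Illustrative data only: one learner answers "B" where the key is "C".
statement = answered_statement(
    "Pat Example",
    "pat@example.com",
    "https://example.com/course/quiz/item-1",
    ["A", "B", "C", "D"],
    response="B",
    correct_choice="C",
)
print(json.dumps(statement, indent=2))
```

A collection of statements like this, one per learner per question, is the raw material that item analysis works from.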

Item analysis details

Item analysis is a long-established statistical method for improving some types of criterion tests (multiple choice) by adjusting test items to a uniform level. In other words, item analysis assesses both what students have learned and how reliable the tests are. It can be done using a spreadsheet. Today there are also apps that do the work, and xAPI helps by collecting all the learner responses. These tools are built around the major concepts of item analysis, including validity, reliability, item difficulty, and item discrimination, particularly in relation to criterion-referenced tests. (How to make better tests.)
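To make item difficulty and item discrimination concrete, here is a minimal sketch of one common way to compute them. It assumes difficulty is the proportion of learners who answered an item correctly, and discrimination is the upper-group minus lower-group difference in that proportion, with groups formed by total score. The function name, the data, and the group size are illustrative assumptions; 27% is a conventional group fraction, and the example uses 50% only because the sample is tiny.

```python
def item_statistics(responses, answer_key, group_fraction=0.27):
    """Return difficulty and discrimination for each item.

    responses: dict mapping learner -> list of chosen options, one per item
    answer_key: list of correct options, one per item
    """
    # Score every answer as 1 (correct) or 0 (incorrect).
    scored = [
        [int(answer == correct) for answer, correct in zip(answers, answer_key)]
        for answers in responses.values()
    ]
    # Rank learners by total score, best first.
    ranked = sorted(scored, key=sum, reverse=True)
    n_group = max(1, round(len(ranked) * group_fraction))
    upper, lower = ranked[:n_group], ranked[-n_group:]

    stats = []
    for i in range(len(answer_key)):
        difficulty = sum(row[i] for row in ranked) / len(ranked)
        discrimination = (
            sum(row[i] for row in upper) - sum(row[i] for row in lower)
        ) / n_group
        stats.append(
            {"item": i + 1, "difficulty": difficulty, "discrimination": discrimination}
        )
    return stats


# Illustrative data: four learners, three items.
answer_key = ["A", "C", "B"]
responses = {
    "learner_1": ["A", "C", "B"],
    "learner_2": ["A", "C", "D"],
    "learner_3": ["A", "B", "D"],
    "learner_4": ["B", "B", "D"],
}
stats = item_statistics(responses, answer_key, group_fraction=0.5)
for row in stats:
    print(row)
```

A difficulty near 1.0 means almost everyone got the item right; a discrimination near zero or below suggests the item does not separate stronger from weaker performers.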

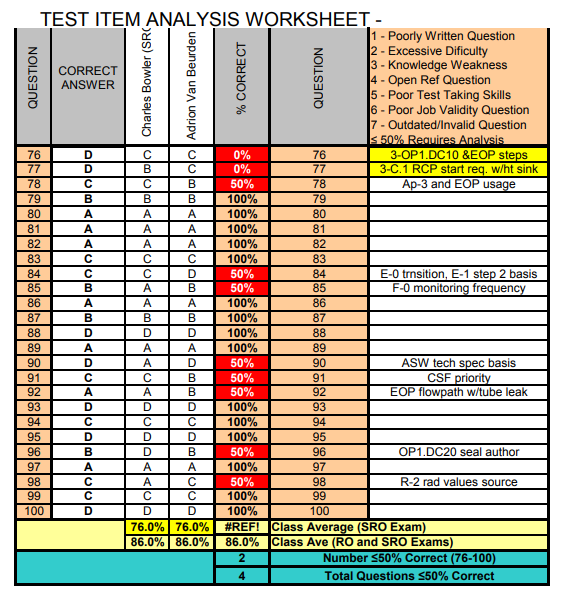

Figure 1: A sample test analysis worksheet

Test item analysis worksheet

Worksheets for particular approaches to test item analysis are available online. Each serves as a model for a particular approach to a criterion test. These worksheets are simply basic templates modeled on Excel or similar spreadsheet software, such as the worksheet from the NRC shown in Figure 1. I am using the NRC document in this article because I find it simpler and easier to figure out than others. Please note that Figure 1 shows only part of the worksheet.

Most item analysis worksheets have similar structures and functionality. In this article I refer to columns according to their positions in the worksheet, top to bottom, left to right.

Column A of Figure 1 indexes criterion items (test questions) developed from the course or experience objectives. Each criterion item statement should be simple, clear, and unambiguous; the choices for each criterion item should also be clear, based on the criterion and the instructor guide. It is best if there are no duplicate or misleading choices (“Only A and B”, “All of the above”, “None of the above”, and so on).

Column B should be used to record the consensus of the subject matter expert(s) as to the correct answers. The SMEs are named at the top of Column B. If only one SME is involved in developing the test items and criterion answers, Columns C and D can be removed from the worksheet (or left blank).

The test item worksheets can be set up for as many subject matter experts as desired to provide “correct” answers in the feedback (Columns C and D). Two SMEs are probably easier to guide to consensus than a larger group.
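Before settling on a consensus key, it can help to see exactly where the SMEs differ. The following is a small sketch of that comparison; the function name, the SME labels, and the answers are assumptions for illustration and are not taken from the NRC worksheet.

```python
def sme_disagreements(sme_keys):
    """Return {item_number: {sme_name: answer}} for items where SMEs differ.

    sme_keys: dict mapping SME name -> list of answers, one per item.
    All SMEs are assumed to have answered the same number of items.
    """
    n_items = len(next(iter(sme_keys.values())))
    disagreements = {}
    for i in range(n_items):
        answers = {name: key[i] for name, key in sme_keys.items()}
        # More than one distinct answer means there is no consensus yet.
        if len(set(answers.values())) > 1:
            disagreements[i + 1] = answers
    return disagreements


# Illustrative data: two SMEs, four items; they differ on item 3.
sme_keys = {
    "SME 1": ["A", "C", "B", "D"],
    "SME 2": ["A", "C", "D", "D"],
}
disagreements = sme_disagreements(sme_keys)
print(disagreements)
```

Items that show up in the result are the ones to discuss before the consensus answer is recorded.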

Column E contains some very easy math: the percentage of correct answers for each item, judged against the SME answers. If you have two SMEs on this task, you will need to decide which one will be the reference for the percentage. If you only have one SME, that task is easier. In Figure 1, the form designer decided to highlight percentages below 50% by turning the cell red.
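The Column E arithmetic can be sketched in a few lines. This example assumes the learner answers are already collected as one list per learner and that one SME key has been chosen as the reference; the function names and data are illustrative. The 50% threshold follows the red highlighting described above and is a parameter, so local policy can change it.

```python
def percent_correct(learner_answers, reference_key):
    """Percentage of learners whose answer matches the reference key, per item."""
    n_learners = len(learner_answers)
    return [
        100 * sum(answers[i] == correct for answers in learner_answers) / n_learners
        for i, correct in enumerate(reference_key)
    ]


def flag_items(percentages, threshold=50.0):
    """Item numbers (1-based) whose percentage falls below the threshold."""
    return [i + 1 for i, pct in enumerate(percentages) if pct < threshold]


# Illustrative data: four learners, three items.
reference_key = ["A", "C", "B"]
learner_answers = [
    ["A", "C", "B"],
    ["A", "C", "D"],
    ["A", "B", "D"],
    ["B", "B", "D"],
]
percentages = percent_correct(learner_answers, reference_key)
flagged = flag_items(percentages)
print(percentages)
print(flagged)
```

With this sample data, only the third item falls below the threshold, so it is the one a reviewer would look at first.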

Column F just repeats the question numbers. This helps highlight errors when you are dealing with a long list of questions.

At the top of Column G there is a small table of code letters that the SME(s) use to identify what they found to be the reason for the incorrect answers. Below that in Column G are the SME’s notes on the items that were at or below 50% correct. The documentation can be as verbose or as terse as local policy requires. The last four lines of the worksheet will help the test developers identify the test difficulty.

Columns E and G will highlight test items that should be rewritten or eliminated.

And there you have a completed item analysis!