Think about one of your eLearning projects; perhaps it’s compliance training or product knowledge. What metrics are you using to determine if your program will be successful?

If you don’t know the metric you’re trying to improve, then you’ll never have a clear picture of whether you improved it. Consequently, you can never actually know if you are succeeding or failing.

This isn’t just your issue—it’s an industry-wide challenge.

At Neovation, we believe there are three reasons that eLearning initiatives fail:

- Using course completions to measure success

- Delivering annual training as a retention strategy

- Not measuring the actual impact of training

1. Using course completions to measure success

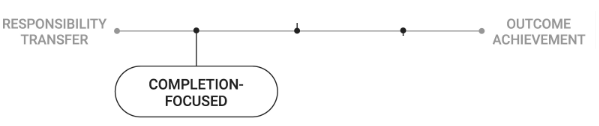

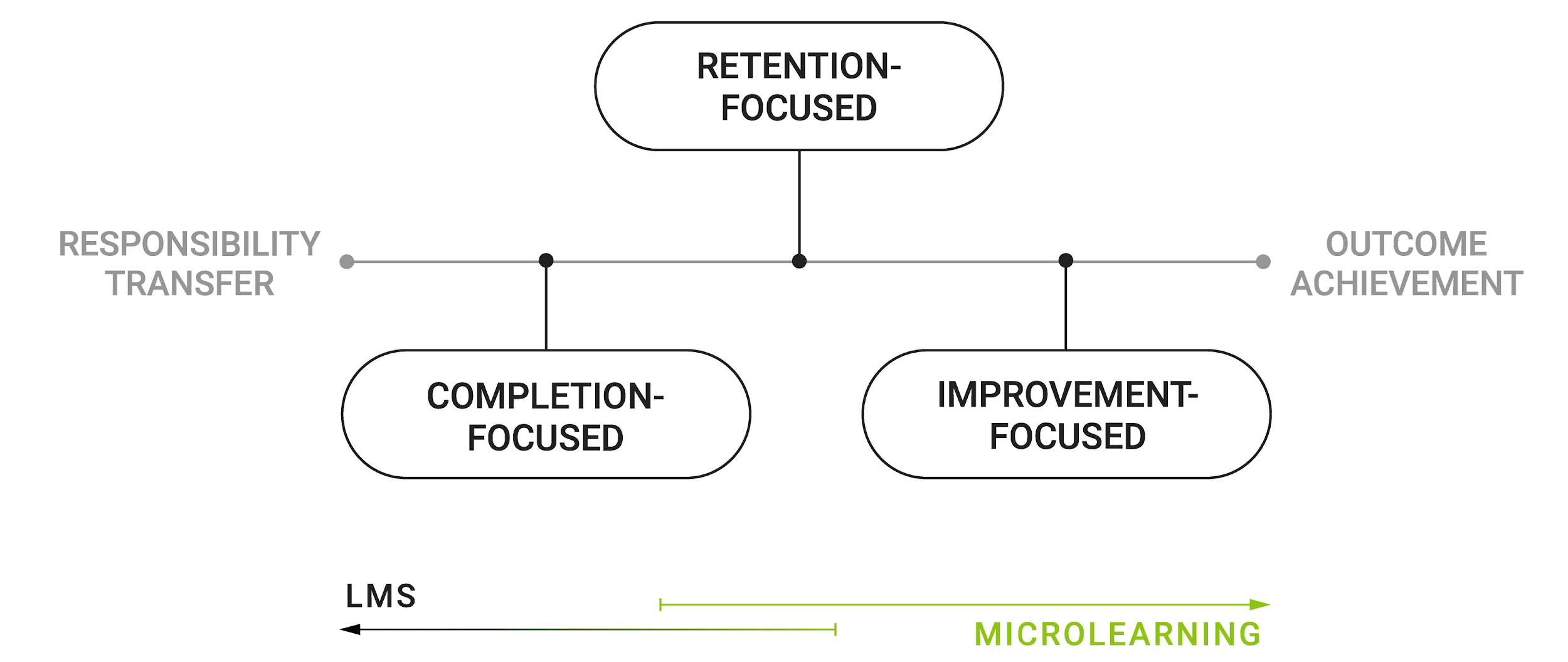

Figure 1: The Training Intent continuum demonstrates a range of intentions for delivering a training program, from transferring responsibility to achieving desired outcomes

The easiest (and thus most popular) metric to focus on is whether learners are completing their assigned training modules.

In addition to a focus on tracking training completions, you might also ask learners to acknowledge that they’ve received and understood the training. In reality, this uses training as a way to transfer responsibility to your learners. You can now hold them liable if they behave in a way contrary to the training they received.

There are two obvious problems with this approach: a lack of knowledge retention and persistent liability.

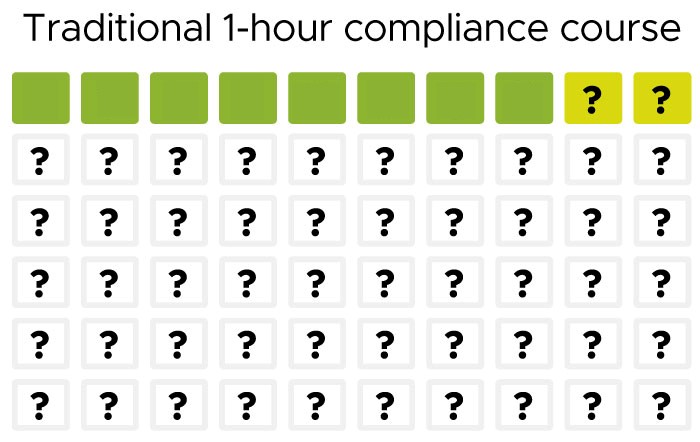

The lack of knowledge retention should be obvious. Imagine that a learner completes a one-hour module and “passes” by achieving a score of 80 percent on a 10-question quiz.

Within the hour-long module, you probably exposed that learner to at least 60 pieces of information. But you've only quizzed them on 10, and of those 10, they only needed to answer eight correctly. And that ignores the open secret that many learners simply click their way through courses and guess their way through the quizzes, and that many quizzes allow multiple retakes.

You end up with a completion record, but the learner may have demonstrated knowledge of only eight out of 60 points, or about 13 percent of the material.

Figure 2: Completing training doesn’t ensure knowledge

If they understand only 13 percent of the material, then there is a potential 87 percent knowledge gap. Your completion record provides a false sense of security.
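The arithmetic behind that 13 percent figure is easy to rerun for your own courses. The sketch below is only an illustration: the function name `demonstrated_coverage` and its parameters are assumptions made for this example, and it uses the deliberately pessimistic reading from the text above, where a passing learner has shown knowledge of nothing beyond the questions they answered correctly.

```python
def demonstrated_coverage(items_taught: int, quiz_questions: int, pass_percent: int) -> float:
    """Fraction of the taught items that a bare passing score demonstrates.

    Worst-case assumption: the learner knows only the quiz items
    they needed to answer correctly in order to pass.
    """
    if items_taught <= 0 or quiz_questions <= 0:
        raise ValueError("items_taught and quiz_questions must be positive")
    # Smallest number of correct answers that meets the pass mark
    # (integer ceiling division avoids floating-point rounding surprises).
    correct_needed = (quiz_questions * pass_percent + 99) // 100
    return correct_needed / items_taught


# The example from the text: 60 pieces of information, 10 questions, 80 percent to pass.
coverage = demonstrated_coverage(items_taught=60, quiz_questions=10, pass_percent=80)
print(f"Demonstrated knowledge: {coverage:.0%}")
print(f"Potential knowledge gap: {1 - coverage:.0%}")
```

Swapping in your own module length, quiz size, and pass mark gives a quick sense of how much a completion record can actually vouch for.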

You’ve also failed to transfer liability, in any meaningful way, to the learners. It used to be sufficient to show that learners had completed training to deflect liability issues, but that may be changing. Regulators are starting to look past compliance rates. In the Principles of Federal Prosecution of Business Organizations, section 9-28.800, the US DOJ specifically calls for prosecutors to “determine whether a corporation’s compliance program is merely a ‘paper program’ or whether it was designed, implemented, reviewed, and revised as appropriate, in an effective manner.”

2. Delivering annual training as a retention strategy

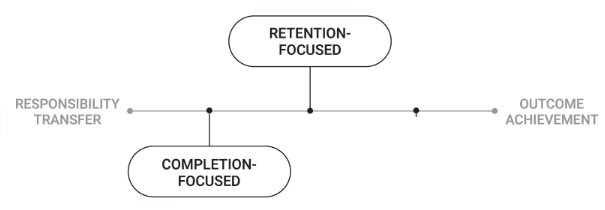

Figure 3: Retention-focused training on the Training Intent continuum

Organizations often deliver annual training campaigns, especially for mandatory or compliance training.

While delivering annual training certainly has some retention benefits over a once-and-done campaign, there are serious flaws with using this approach to improve learner retention of the material.

- Learners have 11+ months of the year to forget the material.

- Learners feel they’ve “done the course before,” so they skip quickly through the material.

- The same knowledge gaps may persist year after year.

3. Not measuring the actual impact of training

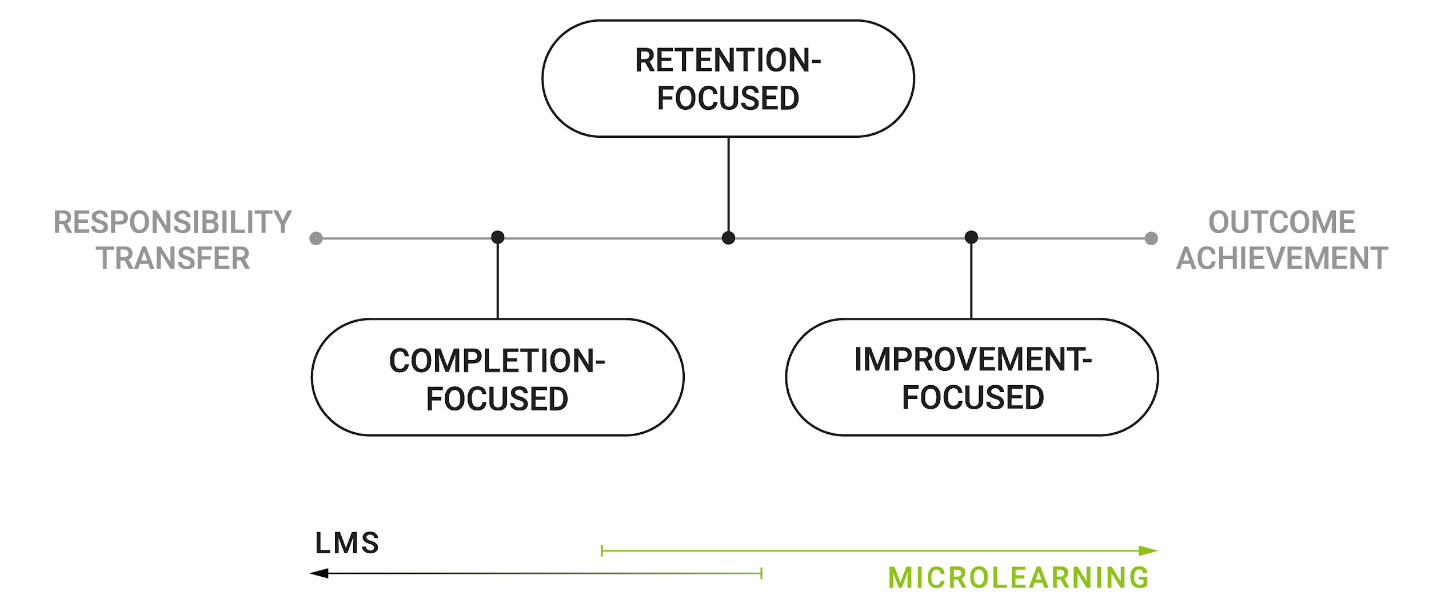

Figure 4: Improvement-focused training is closer to the outcome achievement end of the Training Intent continuum

Without clear, relevant metrics to target, training is often simply an exercise in transferring responsibility to the learner, instead of focusing on achieving particular results.

But, according to a recent eLearning Guild research report, Evaluating Learning: Insights from Learning Professionals, more than 80 percent of organizations measure learner attendance and/or training completion, and 72 percent measure learner satisfaction. Only very small minorities of organizations measure learners’ ability to apply what they’ve learned either during training or on the job.

Process improvement experts from Peter Drucker (“What’s measured improves”) to Scott M. Graffius (“If you don’t collect any metrics, you’re flying blind”) agree that measuring key metrics, and focusing on improving them, is critical to actually achieving desired results.

Depending on your situation, key metrics you want to improve may include:

| Contact Center Metrics | Health & Safety Metrics | Retail |
| --- | --- | --- |
| First contact resolution | Incident rates | Average purchase value |

It’s easy to fix these problems

An improvement-focused eLearning campaign—where you are focused on achieving your desired outcomes—is the best way to improve your identified metrics. Here are five steps to take right away:

- For every eLearning project, determine up to three metrics you want to improve.

- Deliver initial training to provide your learners with an orientation to the content. Know that, after initial training, they will still have knowledge gaps.

- Following initial training, deliver a microlearning-based long-term retention campaign to close the knowledge gaps.

- Measure impacts on your target metrics.

- Correlate metrics and learner knowledge analytics to create a positive feedback loop that evolves the training content over time and achieves even better results.

Figure 5: A blended strategy of training plus support can cover the entire Training Intent continuum
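The fifth step above, correlating a target metric with learner knowledge analytics, can start with something as simple as a correlation coefficient. The sketch below is one possible approach, not a prescribed method: the `pearson` helper is written out by hand for illustration, and the per-team numbers are made-up placeholders, not real results.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least two points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return covariance / sqrt(spread_x * spread_y)


# Placeholder values for illustration only (one entry per team):
# average knowledge score from the retention campaign, and the
# target metric, here first contact resolution rate.
knowledge_scores = [0.55, 0.62, 0.70, 0.81]
first_contact_resolution = [0.61, 0.64, 0.72, 0.79]

r = pearson(knowledge_scores, first_contact_resolution)
print(f"Correlation between knowledge and metric: {r:.2f}")
```

A value near 1 suggests that knowledge and the metric move together, a value near 0 suggests the training content may not be targeting what drives the metric. Correlation alone does not prove the training caused the change, so treat it as a prompt for where to revise content rather than as a verdict.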

In conclusion

A retention-focused microlearning campaign is best delivered through short daily training sessions. Learners often put off sessions longer than two minutes while they try to “schedule” the time. Therefore, your daily sessions should be two minutes or less in duration.

A retention-focused campaign should use adaptive algorithms that leverage spaced repetition and interleaved learning in order to maximize retention.
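To make spaced repetition and interleaving concrete, here is a minimal sketch based on a Leitner-style box system. It is a simplified stand-in, not the adaptive algorithm of any particular product: the `Card` class, the `INTERVALS` values, and the three-question session cap are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical gaps, in days, between reviews for each box.
# Correct answers move a card to a box with a longer gap.
INTERVALS = [1, 2, 4, 8, 16]


@dataclass
class Card:
    topic: str
    question: str
    box: int = 0      # index into INTERVALS
    due_day: int = 0  # day number on which the card is next due


def record_answer(card: Card, correct: bool, today: int) -> None:
    """Spaced repetition: promote on a correct answer, reset on a miss."""
    card.box = min(card.box + 1, len(INTERVALS) - 1) if correct else 0
    card.due_day = today + INTERVALS[card.box]


def daily_session(cards: list[Card], today: int, max_questions: int = 3) -> list[Card]:
    """Pick a short session of due cards, interleaved across topics."""
    due = sorted((c for c in cards if c.due_day <= today), key=lambda c: c.due_day)
    by_topic: dict[str, list[Card]] = {}
    for card in due:
        by_topic.setdefault(card.topic, []).append(card)
    session: list[Card] = []
    # Round-robin over topics so consecutive questions come from different topics.
    while len(session) < max_questions and any(by_topic.values()):
        for queue in by_topic.values():
            if queue and len(session) < max_questions:
                session.append(queue.pop(0))
    return session
```

Capping the session at a few questions is what keeps it within the two-minute window described above, and resetting missed cards to the first box means known gaps resurface the next day instead of the next year.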

By identifying the key metrics you want to improve, leveraging microlearning to complement your initial training, and creating a positive feedback loop to improve your content based on actual impacts on your metrics, your training program will be more successful.